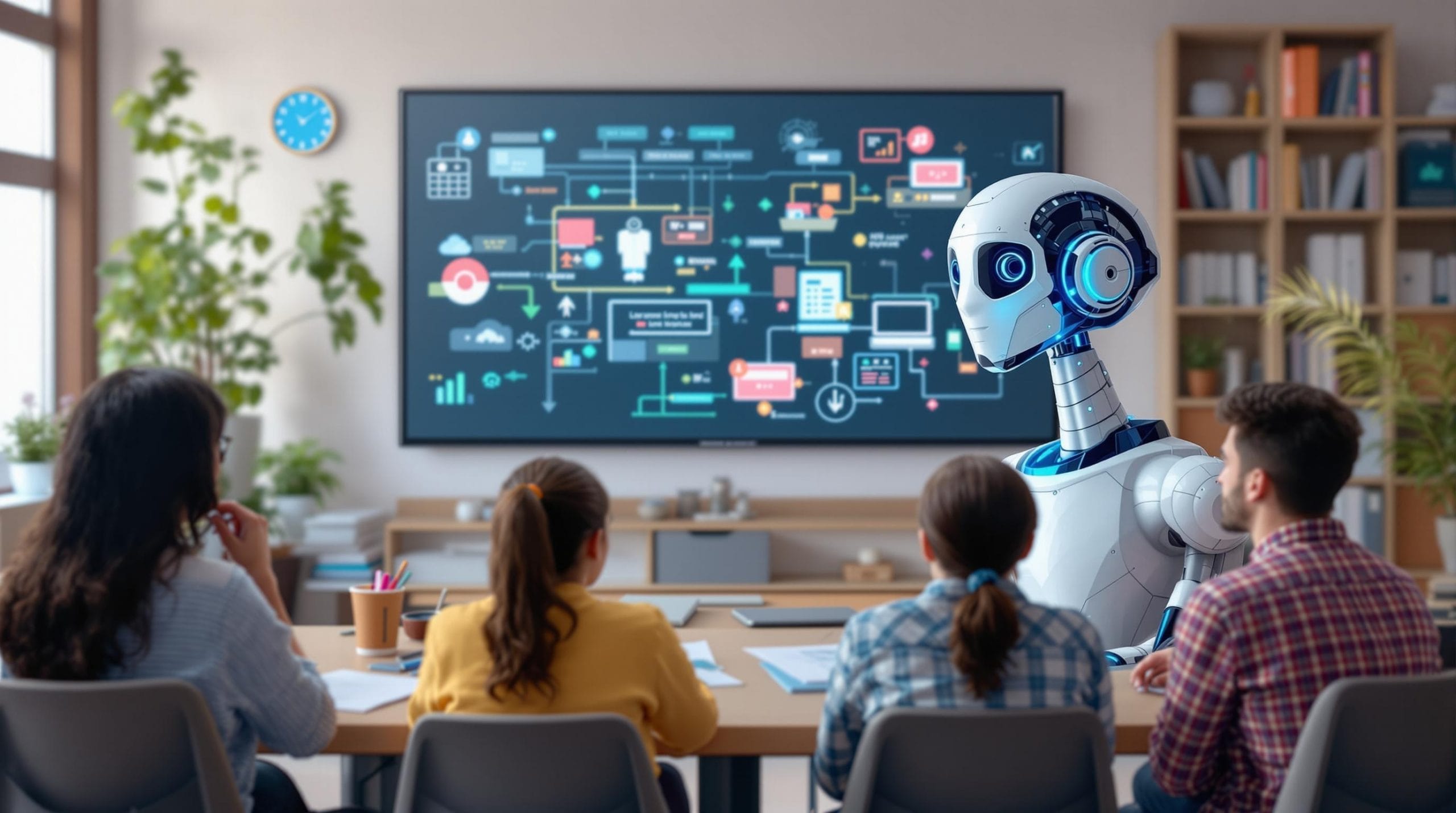

As artificial intelligence transforms educational landscapes worldwide, the key challenge isn’t preventing AI use—it’s teaching students and educators how to harness these tools ethically and effectively. With 86% of students globally already using AI for learning and 60% of teachers incorporating AI into their regular routines, the conversation has shifted from prohibition to purposeful integration that maintains academic integrity while enhancing learning outcomes.

Key Takeaways

- Students are already using AI extensively – 86% globally and 54% weekly – making educator guidance essential rather than optional

- Ethical frameworks should position AI as a thinking partner that supports drafting and feedback while requiring original reasoning and proper attribution

- A 4-question decision framework helps determine appropriate AI use: Does this replace original thinking? Is it allowed? Can I verify outputs? Will I disclose usage?

- Process-oriented assessment designs discourage shortcuts through staged submissions, oral defenses, and local context prompts

- Teachers can reinvest time saved from AI-assisted admin tasks (up to 44%) into high-impact activities like feedback and student conferencing

The Reality Check: Your Students Are Already Using AI

The statistics paint a clear picture of widespread adoption across educational levels. According to Engageli’s 2025 research, 86% of students globally use AI in their studies, with 54% using it weekly and nearly 1 in 4 using it daily. Among U.S. teens specifically, Pew Research Center found that about one in five of those who have heard of ChatGPT have used it for schoolwork.

Teachers aren’t far behind. Sixty percent of educators have incorporated AI into their regular routines, most commonly for research and content gathering (44%), lesson planning (38%), summarizing (38%), and materials creation (37%). Those who use AI for these preparation and administrative tasks report time savings of up to 44% on research, lesson planning, and material creation, according to recent surveys.

The evidence for AI’s learning impact is encouraging. A meta-analysis published in a Nature Portfolio journal found that ChatGPT integration produced an effect size of g ≈ 0.867 on learning performance, which is conventionally read as a large effect and, according to the analysis, substantially larger than that of traditional AI assessment tools. This suggests that, when implemented thoughtfully, AI can produce measurable academic gains.

Establishing an Ethical Framework: Learning With AI, Not From It

The foundation of successful AI integration rests on positioning these tools as “thinking partners” rather than replacement systems. The U.S. Department of Education emphasizes AI’s potential to personalize learning and reduce teacher workload while requiring ethical guardrails and professional judgment through human-in-the-loop workflows.

Explicit classroom policies help students understand the boundaries. Allowed uses should include idea generation, outline creation, code commenting, and language support. Prohibited uses include submitting AI-generated final work without attribution and fabricating citations or data.

Disclosure statements make AI use transparent. For example: “I used ChatGPT to brainstorm an outline and refine grammar; all analysis and final arguments are my own.” Students should also include AI usage logs or prompts as appendices when submitting graded work.

**Allowed vs. Not Allowed Framework:**

- **Green Zone**: Brainstorming, outlining, grammar checking, explaining concepts

- **Yellow Zone**: Draft critique, quiz generation (with verification), research summaries

- **Red Zone**: Final work generation, citation fabrication, submitting unattributed AI content

A template disclosure statement might read: “AI Tool Used: [Tool Name], Purpose: [Brief description], Verification: [Sources checked], Original Contribution: [What you added/analyzed]”
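For classes that collect disclosures electronically, the template can be filled in programmatically. The sketch below is only an illustration: the class name `AIDisclosure`, its field names, and the rule that no field may be left blank are assumptions made for this example, not part of any official policy.

```python
from dataclasses import dataclass


@dataclass
class AIDisclosure:
    """One disclosure entry, mirroring the four fields of the template."""
    tool: str
    purpose: str
    verification: str
    original_contribution: str

    def render(self) -> str:
        # Assumption: an incomplete disclosure should be rejected, not printed.
        for name, value in vars(self).items():
            if not value.strip():
                raise ValueError(f"Disclosure field '{name}' is empty")
        return (
            f"AI Tool Used: {self.tool}, "
            f"Purpose: {self.purpose}, "
            f"Verification: {self.verification}, "
            f"Original Contribution: {self.original_contribution}"
        )


# Example values are illustrative placeholders.
statement = AIDisclosure(
    tool="ChatGPT",
    purpose="Brainstormed an outline and refined grammar",
    verification="Checked claims against two course readings",
    original_contribution="All analysis and final arguments",
).render()
print(statement)
```

Requiring every field keeps the disclosure from collapsing into a bare “I used AI” note, which is the point of the template.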

A Practical Decision Framework: Four Questions for Ethical AI Use

Before any AI interaction, students should apply this 4-question test:

1. Does this replace my original thinking?

2. Is this use allowed by course policy?

3. Can I fully verify outputs with credible sources?

4. Will I disclose and cite this usage appropriately?
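The four questions can also be expressed as a simple checklist, which may help when building a classroom form or worksheet. This is a minimal sketch: the names `AIUseCheck` and `review_ai_use` are hypothetical, and the rule that any single concern means “stop and reconsider” is an assumption, since the framework itself does not say how to weigh the answers.

```python
from dataclasses import dataclass


@dataclass
class AIUseCheck:
    replaces_original_thinking: bool  # Question 1
    allowed_by_course_policy: bool    # Question 2
    outputs_verifiable: bool          # Question 3
    will_disclose_and_cite: bool      # Question 4


def review_ai_use(check: AIUseCheck) -> list[str]:
    """Return the concerns raised by the four questions (empty list = proceed)."""
    concerns = []
    # Question 1 is the only one where "yes" is the warning sign.
    if check.replaces_original_thinking:
        concerns.append("Use would replace original thinking")
    if not check.allowed_by_course_policy:
        concerns.append("Use is not allowed by course policy")
    if not check.outputs_verifiable:
        concerns.append("Outputs cannot be verified with credible sources")
    if not check.will_disclose_and_cite:
        concerns.append("Use would not be disclosed and cited")
    return concerns


# Two illustrative cases: brainstorming an outline vs. having AI write the essay.
brainstorm = AIUseCheck(False, True, True, True)
ghostwrite = AIUseCheck(True, False, True, False)
print(review_ai_use(brainstorm))  # []
for concern in review_ai_use(ghostwrite):
    print("-", concern)
```

Returning the list of concerns, rather than a bare yes or no, gives students something concrete to discuss with their teacher.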

The “source-first” rule requires students to collect and cite at least two credible sources before prompting. AI may help summarize or explain those sources but shouldn’t invent new facts or claims.

Implementing a “reasoned revision” process creates accountability: students submit an original draft → receive AI critique → produce a human revision with tracked changes and a reflection paragraph describing which AI suggestions they accepted or rejected.

This approach reflects the view that students get the most from ChatGPT when its use is paired with verification processes that maintain academic rigor while drawing on AI’s analytical capabilities.

Core Classroom Applications With Ready-to-Use Prompts

**Lesson Planning Efficiency:**

Teachers can generate standards-aligned content while maintaining local relevance. Try this prompt: “You’re planning a 50-minute lesson for Grade 8 on photosynthesis aligned to NGSS MS-LS1-6. Propose 3 learning objectives, a 10-minute activator, 2 differentiation strategies (ELL and advanced), and a 5-question exit ticket keyed to objectives.”

**Formative Feedback Support:**

AI can provide criterion-referenced feedback against rubrics, which teachers then moderate and enhance. Use: “Evaluate this draft against the rubric (paste). Identify 3 strengths, 3 prioritized improvements with examples, and 2 revision tasks. Don’t rewrite; focus on guidance.”

**Writing Development:**

Support students through brainstorming, outlining, and thesis refinement, but prohibit last-minute AI polishing of the final text, which can mask plagiarism. Require drafts and revision history to track authentic development.

**Code Learning Support:**

Help students understand programming concepts with: “Explain this function line by line, then propose 2 unit tests covering edge cases.” Students must write and annotate final code themselves.

These applications show how AI can be used effectively in education: as a study tool, for college students and younger learners alike, that scaffolds learning rather than shortcutting it.

Assessment Designs That Discourage AI Shortcuts

Process-oriented assessment values thinking over final products. Structure assignments as: proposal → annotated bibliography → outline → draft → reflection, grading each stage to emphasize intellectual development.

**Oral defenses and explanations** validate understanding beyond written submissions. Students explain their reasoning, defend choices, and demonstrate mastery through conversation.

**Local context prompts** resist generic AI responses. Instead of “Analyze leadership styles,” try “Analyze leadership approaches used by our school’s student government during last month’s budget discussions, incorporating interview data from three council members.”

**Transfer tasks** emphasize applying concepts to novel contexts rather than recall. This approach recognizes AI as a study partner while ensuring students develop independent analytical skills.

These anti-shortcutting strategies work because they require sustained engagement with material and personal synthesis that AI cannot replicate authentically.

Teaching Students Critical AI Verification Skills

Students must learn to recognize and correct AI hallucinations by cross-checking outputs against textbooks, peer-reviewed articles, and course materials before accepting them.

**Citation fluency** becomes essential in the AI era. Students should cite sources they have located and read themselves and should never cite an AI model as a source of facts, though they may acknowledge AI as a tool used in their process.

**5-Step Fact-Checking Protocol:**

1. Note any factual claims in AI output

2. Cross-reference with course readings

3. Verify through credible databases

4. Flag unverifiable information

5. Document verification sources
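Students who keep their verification notes digitally could record the protocol as a small log. The sketch below is one possible layout, not a prescribed format: the names `ClaimCheck` and `unverified_claims` are hypothetical, the sample claims and source label are placeholders, and treating a claim as verified once either check succeeds and a source is recorded is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class ClaimCheck:
    claim: str                           # Step 1: factual claim noted in the AI output
    in_course_readings: bool = False     # Step 2: cross-referenced with course readings
    in_credible_database: bool = False   # Step 3: verified through a credible database
    sources: list[str] = field(default_factory=list)  # Step 5: documented sources

    @property
    def flagged(self) -> bool:
        # Step 4: flag the claim unless a check confirmed it AND a source is recorded.
        confirmed = self.in_course_readings or self.in_credible_database
        return not (confirmed and self.sources)


def unverified_claims(checks: list[ClaimCheck]) -> list[str]:
    """Return the claims that still need verification or must be dropped."""
    return [c.claim for c in checks if c.flagged]


# Placeholder entries for illustration only.
checks = [
    ClaimCheck(
        claim="Photosynthesis takes place in chloroplasts",
        in_course_readings=True,
        sources=["Course textbook (placeholder reference)"],
    ),
    ClaimCheck(claim="Unattributed statistic quoted by the chatbot"),
]
print(unverified_claims(checks))
```

A log like this doubles as the usage appendix that students attach to graded work.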

Promoting metacognitive reflection helps students understand their learning process. Use prompts like: “What did AI help clarify versus what required your own reasoning? How did you decide which AI suggestions to accept or reject?”

These verification skills are core to learning with AI, and students will need them throughout their academic and professional careers.

Safeguarding Privacy, Equity, and Academic Integrity

**Data protection** requires following district guidance and avoiding personally identifiable information in AI tools without proper data processing agreements. Prefer institutionally provisioned tools when available.

**Accessibility enhancement** represents one of AI’s strongest educational applications. Use AI for language support, reading-level adjustments, and alternative formats while maintaining academic rigor.

**Equity considerations** demand ensuring device and connectivity access while offering non-AI pathways for graded tasks. No student should be disadvantaged by lacking access to AI tools or internet connectivity.

**Detection tool limitations** require caution due to false positives. Prioritize process artifacts and oral checks over automated detection for suspected academic integrity violations.

This balanced approach acknowledges that AI integration must serve all learners equitably while maintaining educational standards.

Ready-to-Implement Policies and Classroom Norms

**Syllabus AI Policy** should specify permitted uses, disclosure requirements, verification expectations, and consequences tied to existing academic integrity policies.

**Traffic Light Classification** provides clear guidance:

- **Green**: Brainstorming, outlining, concept explanation

- **Yellow**: Draft critique, quiz generation with verification

- **Red**: Final work generation, citation fabrication
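A school that publishes its policy on a learning platform might encode the classification as a lookup table. This is a hedged sketch only: the `Zone` enum, the `POLICY` table, and the `classify` helper are hypothetical names, and returning `None` for unlisted activities (so the student asks the teacher) is an assumption about how gaps should be handled.

```python
from enum import Enum
from typing import Optional


class Zone(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    RED = "red"


# Activities copied from the classification above; keys are lowercase for lookup.
POLICY = {
    "brainstorming": Zone.GREEN,
    "outlining": Zone.GREEN,
    "concept explanation": Zone.GREEN,
    "draft critique": Zone.YELLOW,
    "quiz generation": Zone.YELLOW,  # the policy requires verification here
    "final work generation": Zone.RED,
    "citation fabrication": Zone.RED,
}


def classify(activity: str) -> Optional[Zone]:
    """Return the zone for a listed activity, or None if the policy is silent."""
    return POLICY.get(activity.strip().lower())


print(classify("Outlining"))
print(classify("citation fabrication"))
print(classify("image generation"))  # not listed in the policy
```

Keeping unlisted activities as `None`, rather than defaulting them to green or red, makes the gaps in a policy visible so they can be discussed and added.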

**Time Reallocation Strategy** involves reinvesting the time saved on AI-assisted admin tasks (up to 44%) into student conferencing, timely feedback, and small-group instruction.

The global EdTech market is projected to grow to $404B by 2025 at a 16.3% CAGR, which suggests that AI fluency is a durable skill students will need beyond school.

**Sample Policy Statement:** “AI tools may be used for brainstorming and initial feedback but not for final content generation. All AI use must be disclosed with usage logs. Students remain responsible for accuracy, originality, and proper citation of all sources.”

This approach reflects productive educational practices that balance innovation with academic standards.

Building the Future of Education Through Ethical AI Integration

The future of education isn’t about choosing between human instruction and artificial intelligence—it’s about thoughtful integration that amplifies learning while preserving academic integrity. **Prompts for learning** become as important as traditional study skills, requiring explicit instruction in verification, disclosure, and critical evaluation.

Successful implementation requires recognizing that AI serves best as a **study partner** that supports but doesn’t replace student thinking. When students learn to use these tools transparently and ethically, they develop both subject knowledge and digital literacy skills essential for their futures.

The conversation has shifted from whether students will use AI to how we’ll teach them to use it responsibly. By establishing clear frameworks, providing practical tools, and maintaining focus on learning outcomes, educators can harness AI’s potential while upholding the values that make education meaningful.

**Sources**